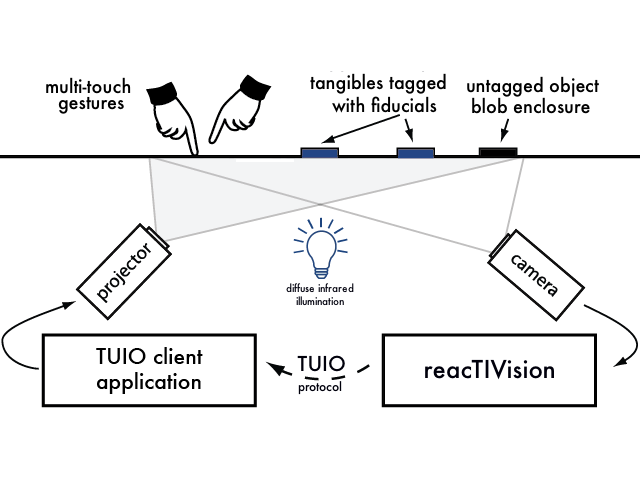

I saw an amazing performance on Saturday using reacTIVision. The performer had set up his lightbox with a small set of strings on one side as the source of sound. He placed a series of small tiles on top of the box, each with an image known as a fiducial on its underside. The tiles were then 'read' by a camera inside the lightbox: the camera picked up the images, and the computer distorted the noise accordingly. The performer had only fully 'programmed' a couple of the tiles, essentially a few loops, so the rest of the sounds were less controlled and more unexpected, creating an improvised set, as it were.

It was fascinating to watch the mixture of control and arbitrary noise created by moving the fiducials around the surface of the box. Even more incredible is the programming behind it. It all gets a bit technical for me. The computer relies on its identification system to match each fiducial and calculate its unique ID, and then "encode the fiducial's presence, location, orientation and identity and transmit this data to the client applications". It's a great example of real-time technology at work, much like Wil Wheaton's current experiments with webcam feeds. Like them, it's bound by the technology it relies on, but the implications are fantastic. It could be expanded endlessly, turned into a projection, and it can use finger-tracking as well (don't even ask, I've not looked). It has expanded my idea of what live mixing can be, since I've always been better at grasping a system of symbols. This could be the next step, not only for literal noise experiments but for dance and for all kinds of music performance.

A wonderful meeting of art and technology, which I lurve. If only I could understand either.
